Understanding How PPC Affects SEO

There’s a lot of debate about how an organization should use organic keyword marketing versus

the way those same organizations should use PPC marketing. And there seem to be two (and possibly

three) distinct camps about what should and shouldn’t happen with these different types of

marketing.

The first position is that PPC programs can hurt organic keyword programs. According to subscribers

to this position, PPC programs damage organic rankings because the act of paying for a listing automatically

reduces the rank of your organic keyword efforts. Those who follow this theory believe that

there is no place for PPC programs.

Another position in this argument is that PPC has no effect at all on SEO. It’s a tough concept to

swallow, because one would naturally believe that any organization paying for a specific rank in

search returns would automatically push organic keyword returns into a lower slot (which supports

the first theory). Those who follow this theory believe that there is no need to invest in PPC,

because you can achieve the same results with organic keywords, though it takes much longer for

those results to become apparent.

The most widely held belief, however, is that a combination of PPC and organic keywords is the best

approach. This theory would seem to have a lot of validity. According to some researchers, PPC programs

tend to be much more effective if an organization also has organic keywords that rank in the

same area as the PPC ranks. For example, if you’ve bid on a keyword that’s consistently placed number

two or three in search engine returns, and you have organic keywords that fall in the next few

slots, you’re likely to find better conversion numbers than either organic keywords or PPC programs

can bring on their own.

It’s important to note here that all search engines make a distinction between PPC and organic SEO.

PPC doesn’t help your organic rankings. Only those activities like tagging your web site properly,

using keyword placement properly, and including great content on your site will help you on the

organic side. PPC is a search marketing strategy.

PPC Is Not Paid Inclusion!

One distinction that is important for you to understand is the difference between PPC and paid-inclusion

(PI) services. Many people believe that PPC and PI services are the same type of marketing,

but there can be some subtle differences. For starters, paid-inclusion services are used by

some search engines to allow web-site owners to pay a one-year subscription fee to ensure that their

site is indexed with that search engine all the time. This fee doesn’t guarantee any specific rank in

search engine results; it only guarantees that the site is indexed by the search engine.

Yahoo! is one company that uses paid inclusion to populate its search index. Not all of the listings

in Yahoo! are paid listings, however. Yahoo! combines both normally spidered sites and paid sites.

Many other search engines have staunchly avoided using paid-inclusion services — Ask.com and

Google are two of the most notable — because most users feel that paid inclusion can skew the

search results. In fact, search engines that allow only paid-inclusion listings are not likely to survive

very long, because users won’t use them.

There is a bit of a gray area between paid inclusion and PPC. It begins at about the point where

both services are paid for. Detractors of these types of programs claim that paying for a listing, any

listing, is likely to make search returns invalid, on the theory that search engines give higher

ranking to paid-inclusion services, just as they do to PPC advertisements.

NOTE Throughout this book, you’ll likely see the terms SEO and search marketing used interchangeably.

Very strictly speaking, search marketing and SEO are quite different activities.

Search marketing includes any activity that improves your search engine rankings — paid or organic.

SEO, on the other hand, usually refers to strictly the free, organic methods used to improve search rankings.

Very often, the two terms are used interchangeably by people using SEO and search engine marketing

techniques. SEO and SEM experts, however, will always clearly differentiate the activities.

Thursday, September 30, 2010

Wednesday, September 29, 2010

Pay-per-Click Categories

Pay-per-click programs are not all created equal. When you think of PPC programs, you probably

think of keyword marketing — bidding on a keyword to determine where your site will be placed

in search results. And that’s an accurate description of PPC marketing programs as they apply to

keywords. However, there are two other types of PPC programs, as well. And you may find that

targeting a different category of PPC marketing is more effective than simply targeting keyword

PPC programs.

Keyword pay-per-click programs

Keyword PPC programs are the most common type of PPC programs. They are also the type this

book focuses on most often. By now, you know that keyword PPC programs are about bidding on

keywords associated with your site. The amount that you’re willing to bid determines the placement

of your site in search engine results.

In keyword PPC, the keywords used can be any word or phrase that might apply to your site.

However, remember that some of the most common keywords have the highest competition for

top spot, so it’s not always advisable to assume that the broadest term is the best one. If you’re in

a specialized type of business, a broader term might be more effective, but as a rule of thumb, the

more narrowly focused your keywords are, the better results you are likely to have with them. (And

PPC will cost much less if you’re not using a word that requires a $50 per click bid.)

Did you know that Google and Yahoo! have $100 caps on their keyword bids? They do.

Imagine paying $100 per click for a keyword. Those are the kinds of keywords that will

likely cost you far more money than they will generate for you. It’s best if you stick with keywords

and phrases that are more targeted and less expensive.

The major search engines are usually the ones you think of when you think keyword PPC programs,

and that’s fairly accurate. Search PPC marketing programs such as those offered by vendors like

Google, Yahoo! Search Marketing, and MSN are some of the most well-known PPC programs.

Product pay-per-click programs

You can think of product pay-per-click programs as online comparison shopping engines or price

comparison engines. A product PPC program focuses specifically on products, so you bid on placement

for your product advertisements.

The requirements for using a product PPC program are a little different from keyword PPC programs,

however. With a product PPC program, you must provide a feed (think of it as a regularly updated price list

for your products) to the search engine. Then, when users search for a product, your links are given

prominence, depending on the amount you have bid for placement. However, users can freely display

those product listings returned by the search engine in order of price, from lowest to highest, if

that is their preference. This means that your product may get good placement initially, but if it’s not

the lowest-priced product in that category, it’s not guaranteed that your placement results will stay in

front of potential visitors.

Some of these product PPC programs include Shopping.com, NexTag, Pricegrabber, and Shopzilla.

Although product PPC programs are popular for controlling the placement of your

product listings, there are some services, like Google Base, that allow you to list your

products in their search engine for free. These free services still require a product feed,

however, to keep product listings current.

Implementing a product feed for your products isn’t terribly difficult, although, depending on the

number of products you have, it can be time-consuming. Most of the different product PPC programs

have different requirements for the product attributes that must be included in the product

feed. For example, the basic information included for all products is an item title, the direct link

for the item, and a brief description of the item.

Attributes that you may need to supply in your product feed include:

title

description

link

image_link

product_type

upc

price

mpn (Manufacturer’s Part Number)

isbn

id

Some product PPC programs will require XML-formatted feeds; however, most will accept delimited

text files (such as simple CSV or tab-delimited files). This means you can create your product lists in an Excel

spreadsheet, and then save that spreadsheet as delimited text by selecting File ➪ Save As and

ensuring the file type selected is a delimited text format.
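
If you would rather generate the feed from a script than from a spreadsheet, the following is a minimal sketch in Python. It assumes a tab-delimited feed whose columns are the attribute names listed above; the `build_feed` function, the sample product, and the example.com URL are hypothetical, and each program's own feed specification decides which columns and delimiter are actually required.

```python
import csv
import io

# Column names come from the attribute list above. The exact set and order
# that a given program expects is an assumption; check its feed specification.
FIELDS = ["id", "title", "description", "link", "image_link",
          "product_type", "price", "upc", "mpn", "isbn"]


def build_feed(products, delimiter="\t"):
    """Return the products as delimited text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, delimiter=delimiter,
                            restval="", lineterminator="\n")
    writer.writeheader()
    for product in products:
        # Attributes missing from a product are written as empty cells.
        writer.writerow(product)
    return buffer.getvalue()


# Made-up sample data, for illustration only.
sample_products = [
    {"id": "1001",
     "title": "Blue Widget",
     "description": "A small blue widget.",
     "link": "http://www.example.com/widgets/blue",
     "price": "9.99"},
]

feed_text = build_feed(sample_products)
print(feed_text)
```

Passing `delimiter=","` produces a comma-separated file instead; the `csv` module quotes any value that contains the delimiter.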

Service pay-per-click programs

When users search for a service of any type, such as travel reservations, they are likely to use search

engines related specifically to that type of service. For example, a user searching for the best price

for hotel reservations in Orlando, Florida, might go to TripAdvisor.com. Advertisers, in this case

hotel chains, can choose to pay for their rank in the search results using a service PPC program.

Service PPC programs are similar to product PPC programs with the only difference being the type

of product or service that is offered. Product PPC programs are more focused on e-commerce products,

whereas service PPC programs are focused on businesses that have a specific service to offer.

Service PPC programs also require an RSS feed, and even some of the same attribute listings as product

PPC programs. Some of the service PPC programs you might be familiar with are SideStep.com

and TripAdvisor.com. In addition, however, many product PPC programs have expanded to include

services. One such vendor is NexTag.

In addition to the three categories of PPC programs discussed in this text, there is an

additional one. Pay-per-call is a type of keyword advertising in which search results

include a phone number. Each time a call is connected through that phone number, the company

that owns the number is charged for the advertising, just as if it were paying for a traditional pay-per-click

service.

Tuesday, September 28, 2010

How Pay-per-Click Works

Pay-per-click marketing is an advertising method that allows you to buy search engine placement

by bidding on keywords or phrases. There are two different types of PPC marketing.

In the first, you pay a fee for an actual SERP ranking, and in some cases, you also pay a per-click fee,

meaning that the more you pay, the higher in the returned results your page will rank.

The second type is more along true advertising lines. This type of PPC marketing involves bidding

on keywords or phrases that appear in, or are associated with, text advertisements. Google is probably

the most notable provider of this service. Google’s AdWords service is an excellent example of

how PPC advertisements work, as is shown in Figure 5-1.

Determining visitor value

So the first thing that you need to do when you begin considering PPC strategies is to determine

how much each web-site visitor is worth to you. It’s important to know this number, because otherwise

you could find yourself paying far too much for keyword advertising that doesn’t bring the traffic

or conversions that you’d expect. For example, if it costs you $25 to gain a conversion (or sale)

but the value of that conversion is only $15, then you’re losing a lot of money. You can’t afford that

kind of expenditure for very long.

To determine the value of each web-site visitor, you’ll need to have some historical data about the

number of visitors to your site in a given amount of time (say a month) and the actual sales numbers

(or profit) for that same time period. This is where it’s good to have some kind of web metrics

program to keep track of your site statistics. Divide the profit by the number of visitors for the same

time frame, and the result should tell you (approximately) what each visitor is worth.

Say that during December, your site cleared $2,500. (In this admittedly simplified example, we’re

ignoring various things you might have to figure into an actual profit and loss statement.) Let’s also

say that during the same month, 15,000 visitors came to your site. Note that this number is for all

the visitors to your site, not just the ones who made a purchase. You divide your $2,500 profit by

all visitors, purchasers or not, because this gives you an accurate average value of every visitor to

your site. Not every visitor is going to make a purchase, but you have to go through a number of

non-purchasing visitors to get to those who will purchase.

Back to the formula for the value of a visitor. Divide the site profit for December ($2,500) by the

number of visitors (15,000) and the value of your visitors is approximately $.17 per visitor. Note

that I’ve said approximately, because during any given month (or whatever time frame you choose)

the number of visitors and the amount of profit will vary. The way you slice the time can change

your average visitor value by a few cents to a few dollars, depending on your site traffic. (Also note

that the example is based on the value of all visitors, not of conversions, which might be a more valid

real-life way of calculating the value of individual visitors. But this example is simply to demonstrate

the principle.)
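
The December arithmetic can be sketched in a few lines of Python. The function name `visitor_value` is only illustrative; the figures are the ones from the example above.

```python
def visitor_value(profit, visitors):
    """Average value of one visitor: profit divided by all visitors."""
    if visitors <= 0:
        raise ValueError("visitors must be a positive number")
    return profit / visitors


# December figures from the example: $2,500 profit, 15,000 visitors.
december_value = visitor_value(2500, 15000)
print(f"${december_value:.2f} per visitor")  # prints "$0.17 per visitor"
```

Running the same function over several months gives a sense of how much the average moves with the time frame you pick.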

The number you got for visitor value is a sort of breakeven point. It means you can spend up to

$.17 per visitor on keywords or other promotions without losing money. But if you’re spending

more than that without increasing sales and profits, you’re going in the hole. It’s not good business

to spend everything you make (or more) to draw visitors to the site. But note the qualifier “without

increasing sales and profits.” If a $.25 keyword can raise your sales and profits dramatically, then it may be worth

buying that word. In this oversimplified example, you need to decide how much you can realistically

spend on keywords or other promotions. Maybe you feel a particular keyword is powerful

enough that you can spend $.12 per click for it, and raise your sales and visitor value substantially.

You have to decide what profit margin you want and what promotions are likely to provide it. As

you can see, there are a number of variables. Real life is dynamic, and eludes static examples. But

whatever you decide, you shouldn’t spend everything you make on PPC programs. There are far

too many other things that you need to invest in.

Popular keyword phrases can often run much more than $.12 per click. In fact, some of the most

popular keywords can run as much as $50 (yes, that’s Fifty Dollars) per click. To stretch your PPC

budget, you can choose less popular terms that are much less expensive, but that provide good

results for the investment that you do make.
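
As a rough sketch of the breakeven idea, the Python below compares a few per-click prices against the $.17 visitor value from the example. It assumes, purely for illustration, that a visitor who arrives through a paid click is worth the same as the average visitor; a keyword that raises sales can be worth more than this simple check suggests. The name `margin_per_click` is hypothetical.

```python
def margin_per_click(value_per_visitor, cost_per_click):
    """What is left of one visitor's average value after paying for the click."""
    return value_per_visitor - cost_per_click


VISITOR_VALUE = 0.17  # approximate breakeven point from the December example

for bid in (0.12, 0.25, 50.00):
    margin = margin_per_click(VISITOR_VALUE, bid)
    status = "under breakeven" if margin > 0 else "over breakeven"
    print(f"${bid:.2f} per click: {status} (margin ${margin:.2f})")
```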

Putting pay-per-click to work

Now that you have your average visitor value, you can begin to look at the different keywords on

which you might bid. Before you do, however, you need to look at a few more things. One of the

top mistakes made with PPC programs is that users don’t take the time to clarify what it is they

hope to gain from using a PPC service. It’s not enough for your PPC program just to have a goal

of increasing your ROI (return on investment). You need something more quantifiable than just

the desire to increase profit. How much would you like to increase your profit? How many visitors

will it take to reach the desired increase?

Let’s say that right now each visit to your site is worth $.50, using our simplified example, and your

average monthly profit is $5,000. That means that your site receives 10,000 visits per month. Now

you need to decide how much you’d like to increase your profit. For this example, let’s say that you

want to increase it to $7,500. To do that, if each visitor is worth $.50, you would need to increase

the number of visits to your site to 15,000 per month. So, the goal for your PPC program should be

“To increase profit $2,500 by driving an additional 5,000 visits per month.” This gives you a concrete,

quantifiable measurement by which you can track your PPC campaigns.
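
The goal calculation above can be worked through in Python as follows. This is a sketch that assumes the value per visit stays at $.50 as traffic grows, which is the same simplification the example makes; `visits_needed` is an illustrative name.

```python
def visits_needed(profit, value_per_visit):
    """Number of visits required to produce a given profit."""
    return profit / value_per_visit


VALUE_PER_VISIT = 0.50

current_visits = visits_needed(5000, VALUE_PER_VISIT)  # current monthly profit
target_visits = visits_needed(7500, VALUE_PER_VISIT)   # desired monthly profit
additional_visits = target_visits - current_visits

print(f"Current visits per month: {current_visits:,.0f}")
print(f"Visits needed for target: {target_visits:,.0f}")
print(f"Additional visits to drive: {additional_visits:,.0f}")
```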

Once you know what you want to spend, and what your goals are, you can begin to look at the different

types of PPC programs that might work for you. Although keywords are the main PPC element

associated with PPC marketing, there are other types of PPC programs to consider as well.

Monday, September 27, 2010

Pay-per-Click and SEO

Pay-per-click (PPC) is one of those terms that you hear connected to

keywords so often you might think they were the conjoined twins of

SEO. They’re not, really. Keywords and PPC do go hand in hand, but it

is possible to have keywords without PPC. It’s not always advisable, however.

Hundreds of PPC services are available, but they are not all created equal.

Some PPC services work with actual search rankings, whereas others are

more about text advertisements. Then there are the category-specific PPC

programs, such as those for keywords, products, and services.

The main goal of a PPC program is to drive traffic to your site, but ideally

you want more out of PPC results than just visits. What’s most important is

traffic that reaches some conversion goal that you’ve set for your web site.

To achieve these goal conversions, you may have to experiment with different

techniques, keywords, and even PPC services.

PPC programs have numerous advantages over traditional search engine

optimization:

No changes to a current site design are required. You don’t have

to change any code or add any other elements to your site. All you

have to do is bid on and pay for the keywords you’d like to target.

PPC implementation is quick and easy. After signing up for a PPC

program, it might only take a few minutes to start getting targeted

traffic to your web site. With SEO campaigns that don’t include

PPC, it could take months for you to build the traffic levels that PPC

can build in hours (assuming your PPC campaign is well targeted).

PPC implementation also doesn’t require any

specialized knowledge. Your PPC campaigns will be much better targeted,

however, if you understand keywords and how they work.

As with any SEO strategy, there are limitations. Bidding for keywords can be fierce, with each competitor

bidding higher and higher to reach and maintain the top search results position. Many organizations

have a person or team that’s responsible for monitoring the company’s position in search

results and amending bids accordingly. Monitoring positions is crucial to maintaining good placement,

however, because you do have to fight for your ranking, and PPC programs can become prohibitively

expensive. The competitiveness of the keywords or phrases and the aggressiveness of the competition

determine how much you’ll ultimately end up spending to rank well.

One issue with PPC programs is that many search engines recognize PPC ads as just

that — paid advertisements. Therefore, although your ranking with the search engine

for which you’re purchasing placement might be good, that doesn’t mean your ranking in other

search engines will be good. Sometimes, it’s necessary to run multiple PPC campaigns if you want

to rank high in multiple search engines.

Before you even begin to use a PPC program, you should consider some basics. A very important

point to keep in mind is that just because you’re paying for placement or advertising space associated

with your keywords, you’re not necessarily going to get the best results with all of the keywords

or phrases that you choose. With PPC services you must test, test, and test some more. Begin small,

with a minimum number of keywords, to see how the search engine you’ve selected performs in

terms of the amount of traffic it delivers and how well that traffic converts into paying customers.

An essential part of your testing is having a method in place that allows you to track your return on

investment. For example, if your goal is to bring new subscribers to your newsletter, you’ll want to

track conversions, perhaps by directing the visitors funneled to your site by your PPC link to a subscription

page set up just for them. You can then monitor how many clicks actually result in a goal

conversion (in this case a new subscription). This helps you to quickly track your return on investment

and to determine how much you’re paying for each new subscriber.
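The arithmetic behind that tracking can be sketched in a few lines of Python. This is only an illustration: the function name and the sample figures (400 clicks at $0.50 each, 20 new subscribers) are made up for the example and don't come from any particular PPC service.

```python
def cost_per_conversion(clicks, cost_per_click, conversions):
    """Return (total_spend, conversion_rate, cost_per_conversion).

    All inputs are hypothetical campaign figures that you would read
    from your own PPC reports and your subscription-page statistics.
    """
    if clicks < 0 or conversions < 0 or conversions > clicks:
        raise ValueError("conversions must be between 0 and clicks")
    total_spend = clicks * cost_per_click
    # Share of paid clicks that ended in the goal (a new subscription).
    conversion_rate = conversions / clicks if clicks else 0.0
    # None means there were no conversions yet, so no cost per conversion.
    per_conversion = total_spend / conversions if conversions else None
    return total_spend, conversion_rate, per_conversion


spend, rate, per_subscriber = cost_per_conversion(400, 0.50, 20)
print(f"Spend: ${spend:.2f}")
print(f"Conversion rate: {rate:.1%}")
print(f"Cost per new subscriber: ${per_subscriber:.2f}")
```

Running the same calculation for each keyword, rather than for the campaign as a whole, shows which keywords are worth keeping as you test.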

Before investing in a PPC service, you may want to review a few different services to determine

which is the best one for you. When doing your preliminary research, take the time to ask the

following questions:

How many searches are conducted each month through the search engine for which

you’re considering a PPC program?

Does the search engine have major partners or affiliates that could contribute to the

volume of traffic you’re likely to receive through the PPC program?

How many searches are generated each month by those partners and affiliates?

What exactly are the terms of service for search partners or affiliates?

How does the search engine or PPC program prevent fraudulent activity?

How difficult is it to file a report about fraudulent activity and how quickly is the issue

addressed (and by whom)?

What recourse do you have if fraudulent activity is discovered?

Do you have control over where your listing appears? For example, can you choose

not to have your listing appear in search results for other countries where your site is not

relevant? Or can you choose to have your listing withheld from affiliate searches?

When you’re looking at different PPC programs, look for those that have strict guidelines about

how sites appear in listings, how partners and affiliates calculate visits, and how fraud is handled.

These are important issues, because in each case, you could be stuck paying for clicks that didn’t

actually happen. Be sure to monitor any service that you decide to use to ensure that your PPC

advertisements are working properly and seem to be targeted well.

It often takes a lot of testing, monitoring, and redirection to find the PPC program that works well

for you. Don’t be discouraged or surprised if you find that you must try several different programs

or many different keywords before you find the right combination. But through a system of trial

and error and diligent effort, you’ll find that PPC programs can help build your site traffic and goal

conversions faster than you could without the program.

Sunday, September 26, 2010

More About Keyword Optimization

There is much more to learn about keywords and pay-per-click programs. In the next chapter,

you learn more about how to conduct keyword research, what pay-per-click programs are, and

how to select the right keywords for your web site. But remember, keywords are just tools to

help you improve your search rankings. The site itself should be designed

to inform, enlighten, or persuade your site visitor to reach a goal conversion.

And that’s truly what SEO is all about. Keywords may be a major component of your SEO strategies,

but SEO is about bringing in more visitors who reach more goal conversions. Without those

conversions, all of the site visitors in the world don’t really mean anything more than that people

dropped by.

Saturday, September 25, 2010

Avoid Keyword Stuffing

Keyword stuffing, mentioned earlier in this chapter, is the practice of loading your web pages with

keywords in an effort to artificially improve your ranking in search engine results. Depending on

the page that you’re trying to stuff, this could mean that you use a specific keyword or keyphrase a

dozen times or hundreds of times.

Temporarily, this might improve your page ranking. However, if it does, the improvement won’t last,

because when the search engine crawler examines your site, it will find the multiple keyword uses.

Because search engine crawlers use an algorithm to determine whether a keyword is used a reasonable number of times on your site, they will discover very quickly that your site can’t support the number

of times you’ve used that keyword or keyphrase. The result will be that your site is either dropped

deeper into the rankings or (and this is what happens in most cases) removed completely

from search engine rankings.

Keyword stuffing is one more black-hat SEO technique that you should avoid. To keep from

inadvertently crossing the keyword stuffing line, you’ll need to do your “due diligence” when

researching your keywords. And you’ll have to use caution when placing keywords on your web

site or in the meta tagging of your site. Use your keywords only as many times as is

absolutely essential. And if it’s not essential, don’t use the word or phrase simply as a tactic to

increase your rankings. Don’t be tempted. The result of that temptation could be the exact opposite

of what you’re trying to achieve.

Friday, September 24, 2010

Taking Advantage of Organic Keywords

We’ve already briefly covered organic keywords. As you may remember, organic keywords

are those that appear naturally on your web site and contribute to the search engine ranking of

the page. By taking advantage of those organic keywords, you can improve your site rankings without

putting out additional budget dollars. The problem, however, is that gaining organic ranking alone

can take four to six months or longer. To help speed the time it takes to achieve good rankings, many

organizations (or individuals) will use organic keywords in addition to some type of PPC (pay per

click) or pay for inclusion service.

To take advantage of organic keywords, you first need to know what those keywords are. One way

to find out is to use a web-site metric application, like the one that Google provides. Some of these

services track the keywords that push users to your site. When viewing the reports associated with

keywords, you can quickly see how your PPC keywords draw traffic, and also which keywords that

you’re not investing in still draw traffic.
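If your metrics application lets you export visits per keyword, separating the organic keywords from the paid ones is a simple filter. The sketch below assumes a hypothetical export in the form of a dictionary of keyword to visit count, plus a list of the keywords you bid on; real reports will need to be converted into that shape first.

```python
def organic_keywords(visits_by_keyword, paid_keywords):
    """List (keyword, visits) pairs for keywords you are not paying for.

    visits_by_keyword: hypothetical report data, keyword -> visit count.
    paid_keywords: the keywords you currently bid on in a PPC program.
    """
    paid = {keyword.lower() for keyword in paid_keywords}
    organic = [
        (keyword, visits)
        for keyword, visits in visits_by_keyword.items()
        if keyword.lower() not in paid
    ]
    # Highest-traffic organic keywords first.
    return sorted(organic, key=lambda pair: pair[1], reverse=True)


report = {"ajax": 120, "writer": 45, "ajax writer": 80}
for keyword, visits in organic_keywords(report, ["AJAX"]):
    print(keyword, visits)
```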

Another way to discover what could possibly be organic keywords is to consider the words that

would be associated with your web site, product, or business name. For example, a writer might

include various keywords about the area in which she specializes, but one keyword she won’t necessarily

want to purchase is the word “writer,” which should be naturally occurring on the site.

The word won’t necessarily garner high traffic for you, but when that word is combined with more

specific keywords, perhaps keywords that you acquire through a PPC service, the organic words

can help to push traffic to your site. Going back to our writer example, if the writer specializes in

writing about AJAX, the word writer might be an organic keyword, and AJAX might be a keyword

that the writer bids for in a PPC service.

Now, when potential visitors use a search engine to search for AJAX writer, the writer’s site has a

better chance of being listed higher in the results rankings. Of course, by using more specific terms

related to AJAX in addition to “writer,” the chance is pretty good that the organic keyword combined

with the PPC keywords will improve search rankings. So when you consider organic keywords, think

of words that you might not be willing to spend your budget on, but that could help improve your

search rankings, either alone or when combined with keywords that you are willing to invest in.

Thursday, September 23, 2010

What’s the Right Keyword Density?

Keyword density is hard to quantify. It’s a measurement of the number of times that your keywords

appear on the page, versus the number of words on a page — a ratio, in other words. So if you have a

single web page that has 1,000 words of text and your keyword appears on that page 10 times (assuming

a single keyword, not a keyword phrase), then your keyword density is 1 percent.
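That ratio is easy to compute yourself. The following Python sketch handles single keywords only, as in the example above. The function name and the rule for splitting text into words are assumptions made for illustration, and search engines may count words differently.

```python
import re


def keyword_density(text, keyword):
    """Return the density of a single keyword as a percentage of all words."""
    # Treat runs of letters, digits, and apostrophes as words (an assumption).
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return 100.0 * hits / len(words)


# 1,000 words of text in which the keyword appears 10 times.
page_text = "shoes " * 10 + "filler " * 990
print(keyword_density(page_text, "shoes"))  # 1.0, that is, 1 percent
```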

What’s the right keyword density? That’s a question that no one has been able to answer definitively.

Some experts say that your keyword density should be around five to seven percent, and others suggest

that it should be a higher or lower percentage. But no one seems to agree on exactly where it

should be.

Because there’s no hard and fast rule, or even a good rule of thumb, to dictate keyword density, site

owners are flying on their own. What is certain is that using a keyword or set of keywords or phrases

too often begins to look like keyword stuffing to a search engine, and that can negatively impact the

ranking of your site.

See, there you are. Not enough keyword density and your site ranking suffers. Too much keyword

density and your site ranking suffers. But you can at least find out what keyword density your competition

is using by looking at the source code for their pages.

Looking at your competition’s source code is also a good way to find out which keywords

they’re using. The listed keywords should appear in the first few lines of code

(indicated in Figures 4-3 and 4-5).

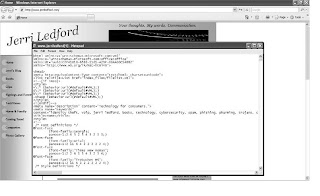

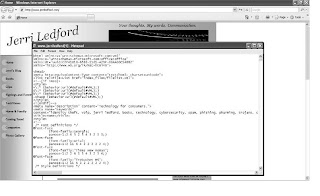

To view the source code of a page if you’re using Internet Explorer, follow these steps:

1. Open Internet Explorer and navigate to the page for which you would like to view the

source code.

2. Click View in the standard toolbar. (In Internet Explorer 7.0, select Page.) The View (or

Page) menu appears, as shown in Figure 4-2.

3. Select View Source and a separate window opens to display the source code from the

web page you’re viewing, as shown in Figure 4-3.

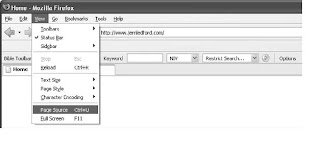

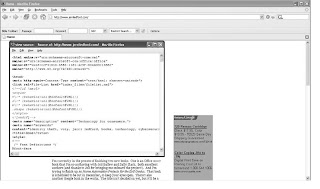

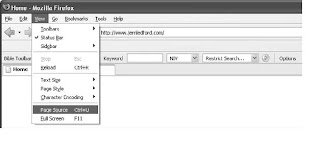

If you’re using the Firefox browser, the menus are slightly different, and the source code looks a

little different. These are the steps for Firefox:

1. Open Firefox and navigate to the page for which you would like to view the source code.

2. Click View in the standard toolbar. The View menu appears, as shown in Figure 4-4.

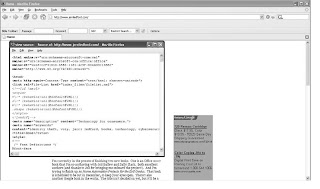

3. Select Page Source to open a separate window that displays the source code for the

web page, as shown in Figure 4-5. Alternatively, you can use the keyboard combination

Ctrl + U to open the source code window.

You may notice that the source code looks a little different in Internet Explorer than it does in

Firefox. The differences are noticeable, but the basic information is all there. That said, it’s not

easy to get through this information. All the text of the page is jumbled in with the page markup.

It may take some time to decipher, but ultimately, this is the best way to find out not only

what keywords the competition is using, but also how they’re using them, and how often the

keywords appear on their pages.
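If reading raw source by eye gets tedious, a short script can pull out the same information. The sketch below uses Python's standard html.parser module to read the keywords meta tag (when a page has one) and count the words in the page text. The class name and the sample page are invented for the example, and it assumes you have already saved the page source as a string.

```python
from collections import Counter
from html.parser import HTMLParser
import re


class KeywordScanner(HTMLParser):
    """Collect the keywords meta tag and count words in the page text."""

    def __init__(self):
        super().__init__()
        self.meta_keywords = []
        self.words = Counter()
        self._skip_depth = 0  # greater than zero inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "keywords":
            content = attrs.get("content") or ""
            self.meta_keywords = [
                k.strip().lower() for k in content.split(",") if k.strip()
            ]
        elif tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Ignore script and style contents; count everything else.
        if not self._skip_depth:
            self.words.update(re.findall(r"[a-z0-9']+", data.lower()))


sample = """<html><head><title>Teen Fashions</title>
<meta name="keywords" content="tank tops, cargo pants">
<style>p { color: blue; }</style></head>
<body><p>Cargo pants and tank tops. More cargo pants.</p></body></html>"""

scanner = KeywordScanner()
scanner.feed(sample)
print(scanner.meta_keywords)
print(scanner.words.most_common(3))
```

The word counts include the page title as well as the body text, so treat the numbers as a rough guide rather than an exact density figure.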

Wednesday, September 22, 2010

Picking the Right Keywords

Keywords are really the cornerstone of any SEO program. Your keywords play a large part in determining

where in search rankings you’ll land, and they also mean the difference between a user’s finding

your page and not. So when you’re picking keywords, you want to be sure that you’ve selected the right ones. The only problem is, how do you know what’s right and what’s not?

Selecting the right keywords for your site means the difference between being a nobody on the Web, and being the first site that users click to when they perform a search. You’ll be looking at two types of keywords. The first is brand keywords. These are keywords associated with your brand. It seems pretty obvious that these keywords are important; however, many people don’t think they need to pay attention to these keywords, because they’re already tied to the site. Not true. Brand keywords are just as essential as the second type, generic keywords.

Generic keywords are all the other keywords that are not directly associated with your company brand. So if your web site, TeenFashions.com, sells teen clothing, then keywords such as clothing, tank tops, cargo pants, and bathing suits might be generic keywords that you could use on your site.

Going back to brand keywords for a moment, if you don’t use the keywords contained in your business

name, business description, and specific category of business, you’re missing out. It seems as if

it wouldn’t be necessary to use these keywords, because they’re already associated with your site, but

if you don’t own them, who will? And if someone else owns them, how will they use those keywords

to direct traffic away from your site?

Before we go too much further in this description of keywords and how to choose the right one,

you should know that keywords fall into two other categories: those keywords you pay a fee to

use (called pay-per-click), and those naturally occurring keywords that just seem to work for you

without the need to pay someone to ensure they appear in search results (these are called organic

keywords).

When you think about purchasing keywords, these fall into the pay-per-click category. When you

stumble upon a keyword that works, that falls into the organic category.

When you begin considering the keywords that you’ll use on your site, the best place to start brainstorming

is with keywords that apply to your business. Every business has its own set of buzzwords

that people think about when they think about that business or the products or services related to

the business. Start your brainstorming session with words and phrases that are broad in scope, but

may not bring great search results. Then narrow down your selections to more specific words and

phrases, which will bring highly targeted traffic. Table 4-1 shows how broad keywords compare to

specific key phrases. Chapter 5 contains more specifics about choosing the right keywords and key phrases. The

principle for choosing keywords is the same, whether the words you’re using are in PPC programs

or they occur organically, so all of the elements of keyword selection for both categories

are covered there.

When you’re considering the words that you’ll use for keywords, also consider phrases

of two to three words. Because key phrases can be much more specific than single words,

it’s easier to rank high with a key phrase than with a keyword. Key phrases are used in the same ways

as keywords; they’re just longer.

Keywords are really the cornerstone of any SEO program. Your keywords play a large part in determining

where in search rankings you’ll land, and they also mean the difference between a user’s finding

your page and not. So when you’re picking keywords, you want to be sure that you’ve selected the right ones. The only problem is, how do you know what’s right and what’s not?

Selecting the right keywords for your site means the difference between being a nobody on the Web, and being the first site that users click to when they perform a search. You’ll be looking at two types of keywords. The first is brand keywords. These are keywords associated with your brand. It seems pretty obvious that these keywords are important; however, many people don’t think they need to pay attention to these keywords, because they’re already tied to the site. Not true. Brand keywords are just as essential as the second type, generic keywords.

Generic keywords are all the other keywords that are not directly associated with your company brand. So if your web site, TeenFashions.com, sells teen clothing, then keywords such as clothing,tank tops, cargo pants, and bathing suits might be generic keywords that you could use on your site.Going back to brand keywords for a moment, if you don’t use the keywords contained in your business

name, business description, and specific category of business, you’re missing out. It seems as if

it wouldn’t be necessary to use these keywords, because they’re already associated with your site, but

if you don’t own them, who will? And if someone else owns them, how will they use those keywords

to direct traffic away from your site?

Before we go too much further in this description of keywords and how to choose the right one,

you should know that keywords fall into two other categories: those keywords you pay a fee to

use (called pay-per-click), and those naturally occurring keywords that just seem to work for you

without the need to pay someone to ensure they appear in search results (these are called organic

keywords).

When you think about purchasing keywords, these fall into the pay-per-click category. When you

stumble upon a keyword that works, that falls into the organic category.

When you begin considering the keywords that you’ll use on your site, the best place to start brainstorming

is with keywords that apply to your business. Every business has its own set of buzzwords

that people think about when they think about that business or the products or services related to

the business. Start your brainstorming session with words and phrases that are broad in scope, but

may not bring great search results. Then narrow down your selections to more specific words and

phrases, which will bring highly targeted traffic. Table 4-1 shows how broad keywords compare to

specific key phrases. Chapter 5 contains more specifics about choosing the right keywords and key phrases. The

principal for choosing keywords is the same, whether the words you’re using are in PPC programs

or they occur organically, so all of the elements of keyword selection for both categories

are covered there.

When you’re considering the words that you’ll use for keywords, also consider phrases

of two to three words. Because key phrases can be much more specific than single words,

it’s easier to rank high with a key phrase than with a keyword. Key phrases are used in the same ways

as keywords; they’re just longer.
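
One way to look for two- and three-word key phrases is to count the word runs that already appear in your own page copy. The sketch below is only an illustration: the function name `candidate_phrases` and the sample copy are hypothetical, and the counting ignores sentence boundaries, so some of the runs it reports will not be meaningful phrases.

```python
import re
from collections import Counter


def candidate_phrases(text, sizes=(2, 3)):
    """Count every run of 2 or 3 consecutive words in `text`.

    Simplification: punctuation is discarded, so runs may span
    two sentences (for example "pants shop").
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    counts = Counter()
    for n in sizes:
        for i in range(len(words) - n + 1):
            counts[" ".join(words[i:i + n])] += 1
    return counts


# Hypothetical page copy for the TeenFashions.com example.
copy = "Teen tank tops and teen cargo pants. Shop teen tank tops today."
counts = candidate_phrases(copy)
for phrase, count in counts.most_common(3):
    print(count, phrase)
```

Phrases that recur in the copy are candidates to review by hand. The count alone says nothing about how well a phrase will rank.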

Tuesday, September 21, 2010

Using Anchor Text

Anchor text — the linked text that is often included on web sites — is another of those keyword anomalies that you should understand. Anchor text, shown in Figure 4-1, usually appears as an underlined or alternately colored word (usually blue) on a web page that links to another page, either inside the same web site or on a different web site.

What’s important about anchor text is that it allows you to get double mileage from your keywords.

When a search engine crawler reads the anchor text on your site, it sees the links that are embedded

in the text. Those links tell the crawler what your site is all about. So, if you’re using your

keywords in your anchor text (and you should be), you’re going to be hitting both the keyword

ranking and the anchor text ranking for the keywords that you’ve selected.
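
To see what the anchor text and its embedded links look like from the crawler’s side, the sketch below pulls each link and its visible text out of a fragment of HTML using Python’s standard `html.parser` module. The class name `AnchorTextParser`, the page fragment, and the URLs are hypothetical, and real crawlers are far more elaborate. This only illustrates the pairing of anchor text with link target.

```python
from html.parser import HTMLParser


class AnchorTextParser(HTMLParser):
    """Collects (href, anchor text) pairs from <a> elements."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside the open <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace in the collected anchor text.
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


# Hypothetical page fragment for the TeenFashions.com example.
page = (
    '<p>Browse our <a href="/tank-tops.html">tank tops</a> and '
    '<a href="/cargo-pants.html">cargo pants</a> for summer.</p>'
)

parser = AnchorTextParser()
parser.feed(page)
for href, text in parser.links:
    print(f"{text!r} -> {href}")
```

Here the keywords "tank tops" and "cargo pants" appear both as page text and as the labels of links, which is the double mileage described above.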

Of course, there are always exceptions to the rule. In fact, everything in SEO has these, and with

anchor text the exception is that you can over-optimize your site, which might cause search engines to reduce your ranking or even block you from the search results altogether. Over-optimization

occurs when all the anchor text on your web site is exactly the same as your keywords, but there is

no variation or use of related terminology in the anchor text.
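
A rough way to check for this lack of variation is to measure how much of your anchor text is one identical phrase. The sketch below is an assumption-laden illustration: the function name `anchor_text_share`, the sample anchor texts, and especially the 0.5 cutoff are hypothetical. Search engines do not publish a threshold for over-optimization.

```python
from collections import Counter


def anchor_text_share(anchor_texts):
    """Return the most common anchor text and the fraction of links using it."""
    if not anchor_texts:
        raise ValueError("anchor_texts must not be empty")
    # Normalize case and whitespace so trivial differences do not hide repeats.
    normalized = [" ".join(text.lower().split()) for text in anchor_texts]
    text, count = Counter(normalized).most_common(1)[0]
    return text, count / len(normalized)


# Hypothetical anchor texts collected from one site.
anchors = [
    "teen clothing", "Teen Clothing", "teen clothing",
    "summer tank tops", "cargo pants for teens",
]

text, share = anchor_text_share(anchors)

# ASSUMPTION: 0.5 is an arbitrary illustrative cutoff, not a published limit.
THRESHOLD = 0.5
if share > THRESHOLD:
    print(f"'{text}' is used in {share:.0%} of links; consider varying it")
```

If one phrase dominates, mixing in related terminology is the remedy the text above suggests.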

Sometimes, web-site owners will intentionally include only a word or a phrase in all their anchor

text with the specific intent of ranking high on a Google search. It’s usually an obscure word or phrase

that not everyone is using, and ranking highly gives them the ability to say they rank number one for

whatever topic their site covers. It’s not really true, but it’s also not really a lie. This is called Google

bombing. However, Google has caught on to this practice and has introduced a new algorithm that

reduces the number of false rankings that are accomplished by using anchor text in this way.

The other half of anchor text is the links that are actually embedded in the keywords and phrases

used on the web page. Those links are as important as the text to which they are anchored.

The crawler will follow the links as part of crawling your site. If they lead to related web sites, your

ranking will be higher than if the links lead to completely unrelated web sites.

These links can also lead to other pages within your own web site, as you may have seen anchor text

in blog entries do. The blog writer uses anchor text, containing keywords, to link back to previous

posts or articles elsewhere on the site. And one other place that you may find anchor text is in your

site map. This was covered in Chapter 3, but as a brief reminder, naming your pages using keywords

when possible will help improve your site rankings. Listing those page names (which are keywords)

on your site map is another way to boost your rankings and thus your traffic. Remember

that a site map is a representation of your site with each page listed as a name, linked to that page.
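
As a minimal sketch of that idea, the code below turns a list of page names and addresses into a simple HTML site map in which each keyword-based page name is the anchor text for its link. The function name `build_site_map` and the page names and URLs are hypothetical examples, not part of any particular tool.

```python
from html import escape


def build_site_map(pages):
    """Render (page name, URL) pairs as an HTML list of links."""
    items = [
        f'  <li><a href="{escape(url, quote=True)}">{escape(name)}</a></li>'
        for name, url in pages
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Hypothetical pages for the TeenFashions.com example.
pages = [
    ("Tank Tops", "/tank-tops.html"),
    ("Cargo Pants", "/cargo-pants.html"),
    ("Bathing Suits", "/bathing-suits.html"),
]

site_map_html = build_site_map(pages)
print(site_map_html)
```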

Anchor text seems completely unrelated to keywords, but in truth, it’s very closely related. When

used properly in combination with your keywords, your anchor text can help you achieve a much

higher search engine ranking.

Monday, September 20, 2010

The Ever-Elusive Algorithm

One element of search marketing that has many people scratching their heads in confusion is the set of

algorithms that actually determine what the rank of a page should be. These algorithms are proprietary

in nature, and so few people outside the search engine companies have seen them in their

entirety. Even if you were to see the algorithm, you’d have to be a math wizard to understand it. And

that’s what makes figuring out the whole concept of optimizing for search engines so difficult.

To put it as plainly as possible, the algorithm that a search engine uses establishes a baseline to

which all web pages are compared. The baseline varies from search engine to search engine. For

example, more than 200 factors are used to establish a baseline in the Google algorithm. And

though people have figured out some of the primary parts of the algorithm, there’s just no way to

know all of the parts, especially when you realize that Google makes about half a dozen changes to

that algorithm each week. Some of those changes are major, others are minor. But all make the algorithm

a dynamic force to be reckoned with.

Knowing that, when creating your web site (or updating it for SEO), you can keep a few design principles

in mind. And the most important of those principles is to design your web site for people, not

for search engines. So if you’re building a site about springtime vacations, you’ll want to include

information and links to help users plan their springtime vacations.

Then if a crawler examines your site and it contains links to airfare sites, festival sites, garden shows,

and other related sites, the crawler can follow these links, using the algorithm to determine if they are

related, and your site ranks higher than if all the links lead to completely unrelated sites. (If they do, that

tells the crawler you’ve set up a bogus link farm, and it will either rank your site very low or not at all.)

The magic number of how many links must be related and how many can be unrelated is just that, a

magic number. Presumably, however, if you design a web page about springtime vacations and it’s

legitimate, all the links from that page (or to the page) will be related in some way or another. The

exception might be advertisements, which are clearly marked as advertisements. Another exception

is if all your links are advertisements that lead to someplace unrelated to the topic (represented by

keywords) at hand. You probably wouldn’t want to have a site that only had links from advertisements,

though, because this would likely decrease your search engine ranking.

The same is true of keywords. Some search engines prefer that you use a higher keyword density

than others. For all search engines, content is important, but the factors that determine whether or

not the content helps or hurts your ranking differ from one search engine to another. And then there

are meta tags, which are also weighted differently by search engines.
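
Keyword density is commonly described as the share of a page’s words taken up by a keyword or key phrase. The sketch below computes it under one such convention, in which each occurrence of a phrase counts for every word it contains. That convention, the function name `keyword_density`, and the sample text are assumptions for illustration. The text above gives no formula, and each search engine weighs density in its own undisclosed way.

```python
import re


def keyword_density(text, phrase):
    """Fraction of the words in `text` that belong to occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = phrase.lower().split()
    if not words or not target:
        return 0.0
    n = len(target)
    # Count positions where the phrase's words appear consecutively.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    # ASSUMPTION: each occurrence contributes all n of its words.
    return hits * n / len(words)


# Hypothetical copy for a site about springtime vacations.
sample = (
    "Plan your springtime vacations early. Our guide to springtime "
    "vacations covers airfare, festivals, and garden shows."
)
density = keyword_density(sample, "springtime vacations")
print(f"{density:.1%}")
```

Because the preferred density differs between search engines, a figure like this is best used to compare your own pages with one another, not as a target to hit.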

So this mysterious baseline that we’re talking about will vary from search engine to search engine.

Some search engines look more closely at links than others do, some look at keywords and context,

some look at meta data, but most combine more than one of those elements in some magic ratio that

is completely proprietary.

What that means for you is that if you design your web site for search engines, you’ll always be playing

a vicious game of cat and mouse. But if you design your web site for people, and make the site

as useful as possible for the people who will visit the site, you’ll probably always remain in all of the

search engines’ good graces.

Of course, most of these heuristics apply more specifically to web-site design and less specifically to

keywords and SEO. However, because SEO really should be part of overall site usability, these are

important principles to keep in mind when you’re designing your web site and implementing your

keyword strategies. As mentioned previously, don’t design your web site for SEO. Instead, build it

for users, with SEO as an added strategy for gaining exposure. Always keep the user in mind first,

though, because if users won’t come to your site, or won’t stay on your site once they’re there, there’s

no point in all the SEO efforts you’re putting into it.
